Students

Open Project Proposals

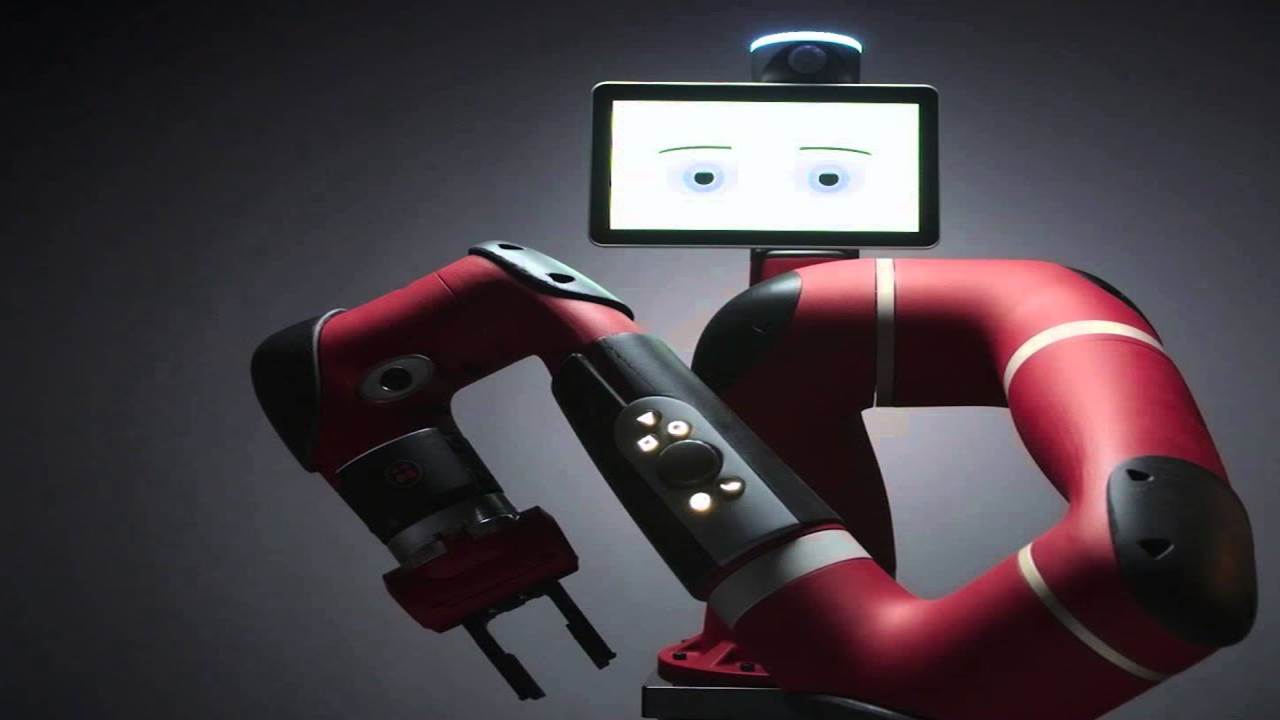

Adapting dynamic projections to user occlusion with a robot arm

Focus areas: Robotics, Interaction, Medialogy

Based on

- K. Hald, M. Rehm, and T. B. Moeslund. 2019. Testing Augmented Reality Systems for Spotting Sub-Surface Impurities. In Human Work Interaction Design. Designing Engaging Automation, B. Barricelli et al. (Eds.). Springer, 103-112.

Topics: Detection of occlusion (e.g., by the user's hand) and adjustment of the projection via a robot arm

Project context: Innovationfonden project ACMP

Contact: Matthias Rehm

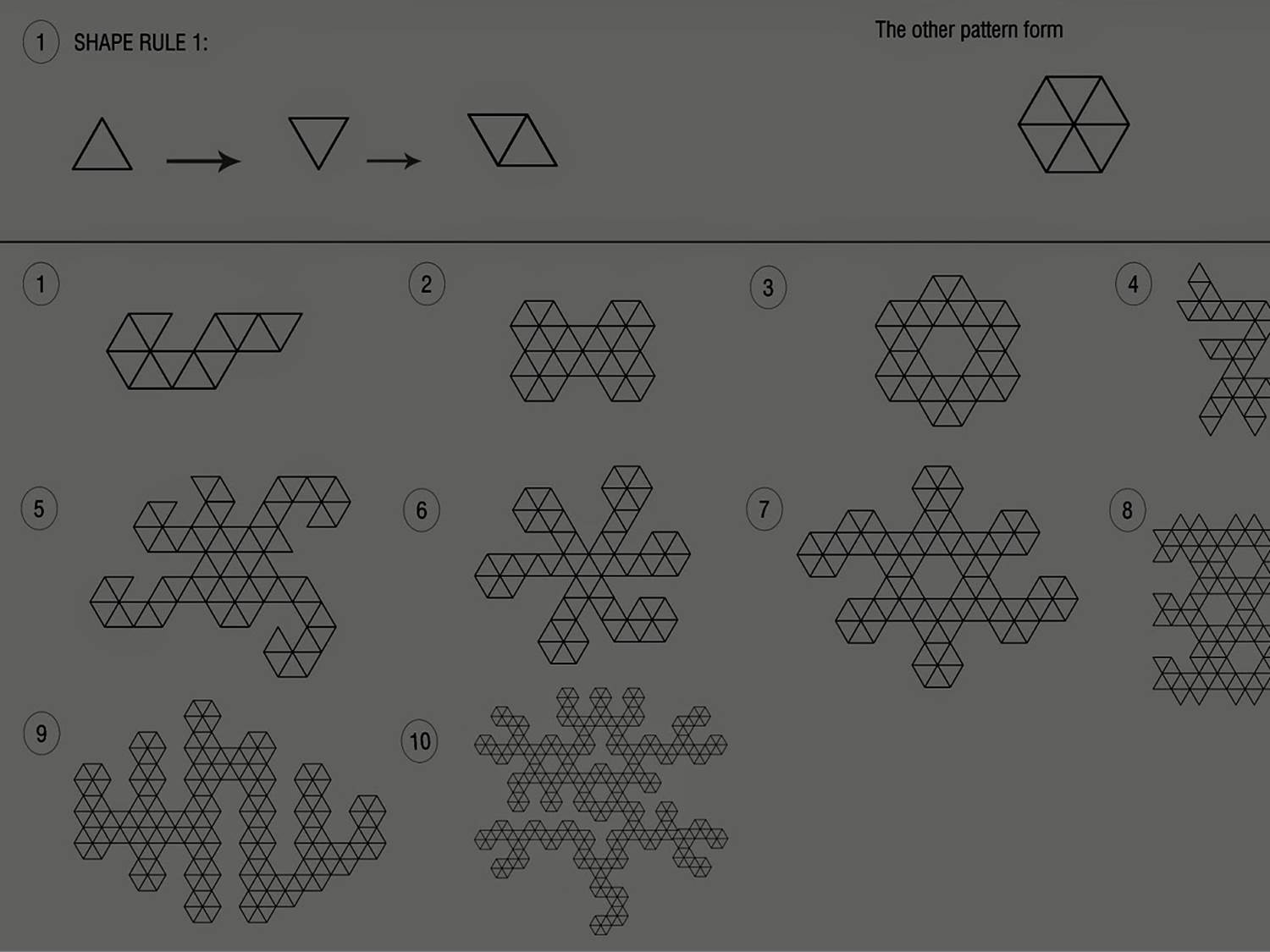

Parametric Approaches to Robot Design

Focus areas: Robotics, Design, Graphics, Interaction, Medialogy

Based on

- Jun Kato and Masataka Goto. 2017. f3.js: A Parametric Design Tool for Physical Computing Devices for Both Interaction Designers and End-users. In Proceedings of the 2017 Conference on Designing Interactive Systems (DIS '17). ACM, New York, NY, USA, 1099-1110.

- David Jason Gerber, Shih-Hsin (Eve) Lin, Bei (Penny) Pan, and Aslihan Senel Solmaz. 2012. Design optioneering: multi-disciplinary design optimization through parameterization, domain integration and automation of a genetic algorithm. In Proceedings of the 2012 Symposium on Simulation for Architecture and Urban Design (SimAUD '12). Society for Computer Simulation International, San Diego, CA, USA.

Topics: Extending shape grammars and similar parametric design approaches to incorporate technical requirements (sensors, services, interaction, etc.)

Project context: Frederikshavn Kommune (Senhjerneskadecenter Nord)

Contact: Matthias Rehm

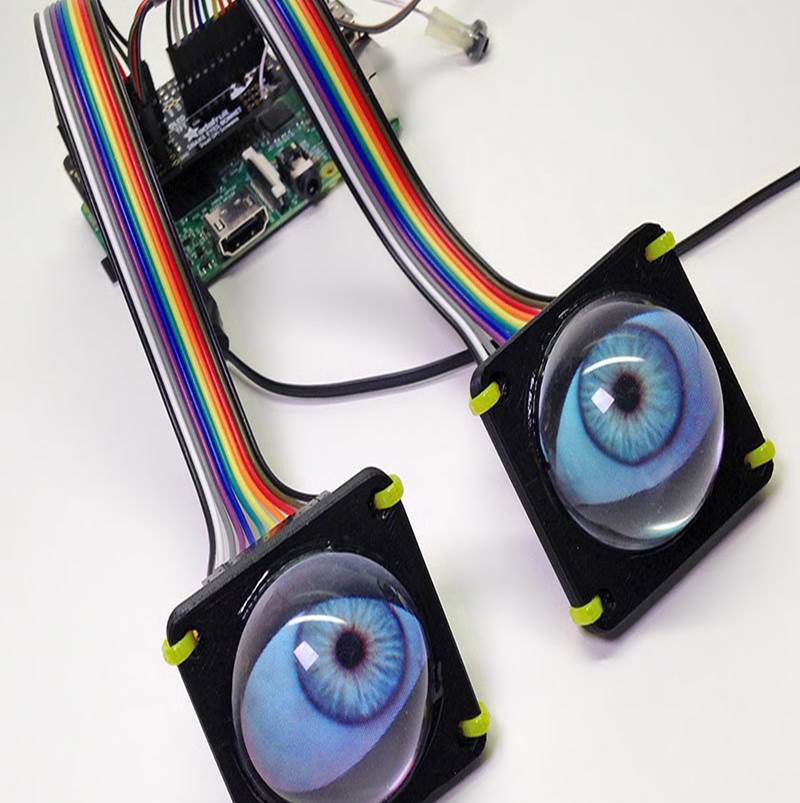

Using eyes as output in HRI

Focus areas: Design, Graphics, Interaction, Medialogy

Based on

- Frédéric Delaunay, Joachim de Greeff, and Tony Belpaeme. 2010. A study of a retro-projected robotic face and its effectiveness for gaze reading by humans. In Proceedings of the 5th ACM/IEEE international conference on Human-robot interaction (HRI '10). IEEE Press, 39-44.

- Tian (Linger) Xu, Hui Zhang, and Chen Yu. 2016. See You See Me: The Role of Eye Contact in Multimodal Human-Robot Interaction. ACM Trans. Interact. Intell. Syst. 6, 1, Article 2 (May 2016), 22 pages. DOI: https://doi.org/10.1145/2882970

Topics: Design of the eyes and their movement; gaze behavior in relation to its functions in the interaction; use of the eyes as a nonverbal output channel

Project context: Frederikshavn Kommune (Senhjerneskadecenter Nord)

Contact: Matthias Rehm

Master Students

Alexander Schiller Rasmussen

Master thesis: Blind Tunnel Vision: Narrowing the Field of Detection and Range of Electronic Mobility Aids

Anders Skaarup Johansen

Master thesis: Affect-Based Trust Estimation in Human-Robot Collaboration

Artúr Barnabás Kovács

Master thesis: Utilizing the Environment Through Play - A Study on a Mobile Learning Based Geometry Application

Balázs Gyula Koltai

Master thesis: Comparing Eye Tracker Input to Traditional Input for Measuring Player Performance, Immersion, and Engagement in an Emergent Narrative

Caros Gomez Cubero

Master thesis: Prediction of Choice Using Eye Tracking and VR

Casper Sloth Mariager

Master thesis: Using Machine Learning and Natural Language Processing for Manifesting Robot Characteristics in Order to Increase Long-Term Engagement

Emil Bonnerup

Master thesis: Episodic User Adoption of Social Robots in Public Spaces

Ingeborg Goll Rossau

Master thesis: Application of Performance Accommodation Mechanisms in BCI-like Games to Reduce Frustration and Increase Perceived Control

Jedrzej Jacek Czapla

Master thesis: Application of Performance Accommodation Mechanisms in BCI-like Games to Reduce Frustration and Increase Perceived Control

Jesper Vang Christensen

Master thesis: Utilizing the Environment Through Play - A Study on a Mobile Learning Based Geometry Application

Jesper Wædeled Henriksen

Master thesis: Affect-Based Trust Estimation in Human-Robot Collaboration

Jorge Villa Yagüe

Master thesis: Learning player abilities based on multimodal data

Kresta Louise Febro Bonita

Master thesis: Giving people ideas about what to do with their fingers - signifying gestures on touch-based devices

Laura-Dora Daczo

Master thesis: Giving people ideas about what to do with their fingers - signifying gestures on touch-based devices

Lucie Kalova

Master thesis: Giving people ideas about what to do with their fingers - signifying gestures on touch-based devices

Martin Viktor

Master thesis: Virtual Reality as a Test-bed for User Experience (in collaboration with B&O)

Milo Marsfeldt Skovfoged

Master thesis: Blind Tunnel Vision: Narrowing the Field of Detection and Range of Electronic Mobility Aids

Mózes Adorján Mikó

Master thesis: Comparing Eye Tracker Input to Traditional Input for Measuring Player Performance, Immersion, and Engagement in an Emergent Narrative